Photo courtesy of Portugal’s News

In Sri Lanka, the government recently announced that it would consider enacting laws against the spread of fake and misleading assertions on social media. In light of this development, a public conversation has re-emerged about ‘fake news’, and what the appropriate responses to it may be. Before we proceed with laws that police ‘fake news’, we need a robust understanding of what counts as ‘fake news’.

‘Fake news’ is part of a broader concept called ‘information disorder’. However, the current classification of ‘information disorder’ fails to provide a practical framework through which false and harmful information can be understood and analysed. This article examines the current classification, and highlights some of its practical weaknesses.

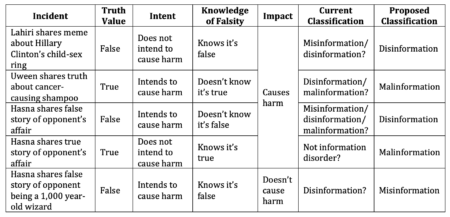

‘Information disorder’ typically refers to the production and dissemination of erroneous information or accurate information shared with an intention to cause harm. This term was proposed in a Council of Europe report on information disorder. It is widely accepted and cited. Information disorder is typically categorised as: (1) misinformation, (2) disinformation, and (3) malinformation. The definition of each category is dependent on two factors: falseness and intent to cause harm.

Misinformation is defined as false information that is shared without the intent to cause harm. For example, if Lahiri shares a meme saying that Hillary Clinton ran a pizza-restaurant child-sex ring thinking it is true, it would be considered ‘misinformation’.

Disinformation is defined as false information that is knowingly shared with the intent to cause harm. For example, if an ad executive for shampoo, Uween, deliberately fabricates a report that his rival’s shampoo causes cancer, it would be considered ‘disinformation’.

Malinformation is defined as accurate information that is shared with the intent to cause harm. For example, if an MP, Hasna, maliciously reveals that her political opponent had an extramarital affair, which happens to be true, it would be considered ‘malinformation’.
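To make the two-factor definition concrete, the three categories can be written as a small lookup. This is only an illustrative sketch: the function name `classify_by_intent` and its boolean parameters are assumptions made for this example and do not come from the Council of Europe report, and it presumes that both factors are fully known.

```python
from typing import Optional


def classify_by_intent(is_false: bool, intends_harm: bool) -> Optional[str]:
    """Map the two factors (falseness, intent to cause harm) to a category.

    Hypothetical helper for illustration only; assumes both factors are known.
    """
    if is_false and not intends_harm:
        return "misinformation"
    if is_false and intends_harm:
        return "disinformation"
    if not is_false and intends_harm:
        return "malinformation"
    # Accurate information shared without intent to harm falls outside
    # all three categories.
    return None


# The three examples from the text.
print(classify_by_intent(is_false=True, intends_harm=False))   # Lahiri: misinformation
print(classify_by_intent(is_false=True, intends_harm=True))    # Uween: disinformation
print(classify_by_intent(is_false=False, intends_harm=True))   # Hasna: malinformation
```

Note that the sketch needs a definite answer for `intends_harm` before it can return anything, which is the factor that is hardest to establish in practice.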

The Council of Europe’s system of classification assumes that we have full information about someone’s intent when categorising different cases. The system infers ‘intent’ from whether the sharer knows that the information in question is false. But in practice, it is often impossible to determine the sharer’s exact intent when examining a particular case of information disorder. If we do not know what a person’s intentions were, or whether they knew the information was false, we cannot classify the information they shared under the existing system.

For example, what if Lahiri knows Hillary Clinton did not run a child-sex ring, but still ironically shares the meme because she thinks it is funny and assumes people who see it will know it is in jest? Can the current system properly classify the meme? It would probably determine that the meme isn’t disinformation because Lahiri does not intend to cause harm. However, it’s not necessarily misinformation, because Lahiri knows it is false.

What if the shampoo Uween is referring to does actually cause cancer, even though Uween thinks he is fabricating the story? The classification would not consider Uween’s statement disinformation because it’s technically true. But it’s not necessarily malinformation either because Uween thinks it’s false.

What if Hasna truly believes her opponent had an extramarital affair, but in reality, it is not true? The classification would not consider it misinformation because Hasna intends to harm. But it is not malinformation because it’s false, nor is it disinformation because Hasna thinks it’s true. Alternatively, what if the affair is true, but Hasna’s intent is to make sure the people have access to the truth so that they can make an informed decision? The classification would not consider it malinformation because there is no intention to harm.

However, in each of these circumstances, harm may still have been caused. By contrast, what if Hasna tries to encourage prejudice with respect to her opponent’s age by claiming he is a ‘1,000-year-old wizard’, but people instead use her content to talk about his experience and wisdom? The classification would consider it to be disinformation because of Hasna’s ill intent, despite the lack of discernible harm.

The system of information disorder should categorise information, not conduct. The ambiguities illustrated above demonstrate how the existing categorisation of information disorder evaluates the culpability of the sharer and not the information itself. These ambiguities showcase the current classification’s lack of consistency and rigour. The examples also illustrate how intent and conduct do not always have an impact on the damage done by a piece of false information. A more robust categorisation of information disorder should rely on the falseness and impact of the information itself, instead of focusing on the sharer’s intent.

Imagine an alternative classification system where misinformation is false information that does not cause harm, disinformation is false information that causes harm, and malinformation is accurate information that causes harm. Under this system, Lahiri’s meme about Hillary Clinton would be considered disinformation, and Uween’s shampoo story, which happens to be true, would be malinformation, because regardless of intent, they both cause harm. Similarly, Hasna’s story about her opponent’s affair would be disinformation if it were false, and malinformation if it were true. On the other hand, Hasna’s story about her opponent’s age would be considered misinformation because it does not cause discernible harm.
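The alternative system can be sketched in the same way, with the sharer’s intent removed as an input. The function name `classify_by_harm` and its parameters are hypothetical, chosen only for this illustration, and the sketch assumes that whether harm occurred can be established as a simple yes or no.

```python
from typing import Optional


def classify_by_harm(is_false: bool, causes_harm: bool) -> Optional[str]:
    """Classify information by its truth value and its impact, not by intent.

    Hypothetical helper for illustration only.
    """
    if is_false and not causes_harm:
        return "misinformation"
    if is_false and causes_harm:
        return "disinformation"
    if not is_false and causes_harm:
        return "malinformation"
    # Accurate and harmless information is not information disorder.
    return None


# The scenarios discussed above, classified without reference to intent.
print(classify_by_harm(is_false=True, causes_harm=True))    # Lahiri's meme: disinformation
print(classify_by_harm(is_false=False, causes_harm=True))   # Uween's true story: malinformation
print(classify_by_harm(is_false=True, causes_harm=False))   # Hasna's wizard claim: misinformation
```

Because neither input depends on what the sharer knew or intended, every scenario receives exactly one answer, although deciding what counts as harm would still require judgement.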

Democracy requires citizens to form informed opinions and make informed choices. Different types of false and harmful information – what we often call ‘fake news’ – can manipulate those opinions and choices. We need to rethink the existing classification of information disorder so we are better placed to deal with this problem. A coherent classification is particularly vital in countries such as Sri Lanka, where governments have been criticised for excessive responses to the spread of ‘fake news’, and are not trusted to act with restraint. A nebulous or ambiguous classification of information disorder could widen the potential for state abuse of the law to curb individual freedom of expression and silence detractors.

A viable alternative to the existing system should categorise information based on its truth value, and on whether it causes harm. Adopting a more coherent classification would help us respond better to harmful ‘fake news’, and better preserve democracy.