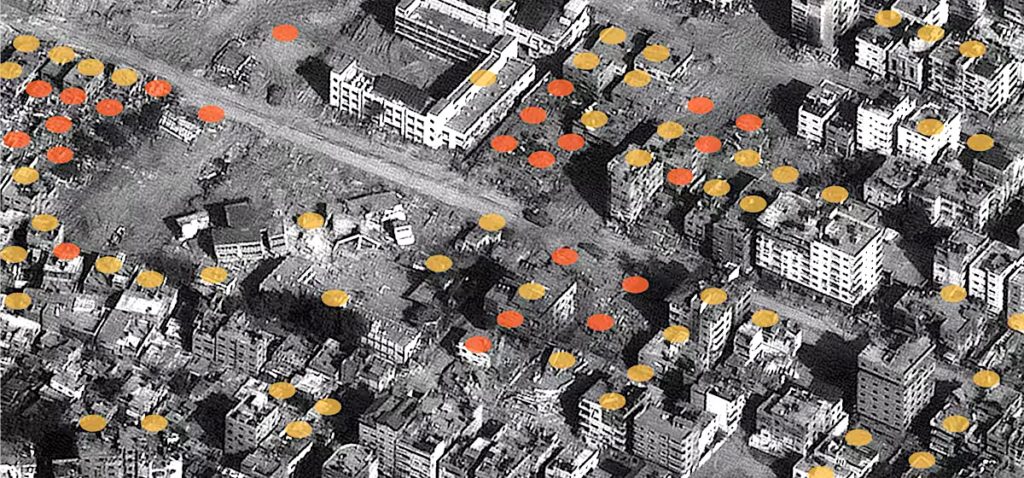

Photo courtesy of The Washington Post

The ongoing conflict in Gaza has become a chilling showcase for the perils of Artificial Intelligence (AI) in warfare. Israel’s deployment of AI-powered systems to generate target lists and guide attacks, as documented in harrowing detail by Israeli-Palestinian media outlets, exposes the terrifying reality of algorithms deciding who lives and dies. This isn’t a future dystopian scenario; it is happening now, with devastating consequences for Palestinian civilians. The implications of this AI-driven warfare extend far beyond Gaza, serving as a warning for marginalized communities and the Global South, regions increasingly vulnerable to becoming the testing grounds for these emerging technologies.

The dystopian AI kill chain

Investigative journalists have reported on how the Israel Defence Forces (IDF) are using AI to streamline the “kill chain” – the process of identifying, tracking and eliminating targets. While the IDF maintains that these AI systems are simply “auxiliary tools” designed to assist human operators, the testimonies reveal a disturbing reliance on algorithmic decision-making, often with minimal human oversight and a shocking disregard for civilian lives.

As revealed by an extensive report from +972 Magazine, at the heart of this automated kill chain sits Lavender, an AI system designed to generate target lists of suspected militants by sifting through the vast troves of data collected through Israel’s pervasive surveillance network in Gaza. Lavender analyses a wide range of personal data – social media activity, phone records, physical location and even membership in WhatsApp groups – to determine an individual’s likelihood of being a Hamas operative. The system flags individuals based on perceived suspicious characteristics including their social connections, communication patterns, movements and physical proximity to known militants.

According to Israeli officers, Lavender makes “errors” in approximately 10 percent of cases. The criteria used to label someone a militant are shockingly broad, often based on the faulty assumption that being a male of fighting age, living in certain areas of Gaza or exhibiting specific communication patterns is sufficient evidence to warrant suspicion and even lethal action. To further expedite the automated kill chain, the IDF uses a system called “Where’s Daddy?”, which geographically tracks these individuals and notifies operators when they enter their family residences. This system, relying heavily on cell phone location data and potentially supplemented by facial recognition technology and other surveillance tools, enables the IDF to carry out mass assassinations by bombing private homes, often at night, knowing that families are likely present. This deliberate targeting of individuals in their homes, where the presence of civilians is almost guaranteed, raises grave concerns about the IDF’s adherence to the fundamental principles of distinction and precaution in international humanitarian law.

The inherent inaccuracy of relying solely on mobile phone data to pinpoint the location of a specific individual at a given time, as pointed out by Human Rights Watch, further raises the risk of deadly mistakes and unintended civilian harm. The use of unguided munitions, or “dumb bombs,” in many of these strikes adds another layer of imprecision and lethality. While less expensive than “smart” precision-guided munitions, dumb bombs are inherently indiscriminate, often causing significant collateral damage. The chilling fact that the IDF opted to use dumb bombs against “unimportant” targets reflects a callous disregard for civilian lives and raises the question of whether cost-effectiveness is being prioritized over human life.

AI, ethics and the illusion of virtuous war

The testimonies from Israeli intelligence officers reveal a disturbing shift in the IDF’s approach to targeting, marked by an alarming disregard for the principles of distinction, proportionality and precaution enshrined in international humanitarian law. The willingness to accept pre-authorized collateral damage ratios, particularly when targeting individuals flagged by Lavender, reflects a dangerous devaluation of civilian lives. Intelligence officers described being permitted to kill up to 15 or 20 civilians in airstrikes on low-ranking militants, while in strikes against senior Hamas commanders the authorization for civilian casualties could exceed 100. These ratios, which, as noted in recent research, far exceed what other militaries such as the US have historically deemed acceptable, expose a chilling disregard for the principle of proportionality – the fundamental requirement that the expected civilian harm from an attack must not be excessive in relation to the anticipated military advantage gained.

Human Rights Watch highlights the potential for “automation bias” to further exacerbate this disregard for civilian lives. This cognitive bias, which leads individuals to place excessive trust in the outputs of automated systems, could reduce scrutiny of AI-generated target recommendations, potentially bypassing critical human judgment and ethical considerations. The “black box” problem further obscures the inner workings of systems like Lavender, making it difficult to understand how the system reaches its conclusions, identify potential biases or hold actors accountable for its outcomes. The inherent limitations of AI systems, particularly their susceptibility to bias and their inability to adapt to the unpredictable nature of conflict, challenge the notion that these technologies can make war more ethical. That notion relies on the faulty assumption that technology can be divorced from its human creators and operators. In reality, AI systems inherit the biases of the data they are trained on, as well as the political and strategic objectives of those who deploy them. Far from reducing harm to civilians, Lavender has enabled widespread death and destruction. This underscores the dangers of techno-solutionism – the belief that technology alone can solve complex problems – and highlights the urgent need for robust ethical and legal frameworks to govern the development and use of AI in warfare.

The Global South: A vulnerable frontier in the age of AI warfare

The alarming reality of AI-driven warfare in Gaza holds particularly grave implications for the Global South. These regions, often lacking the resources and expertise to develop sophisticated AI systems, are highly vulnerable to becoming testing grounds for emerging and often untested technologies. Many countries in the Global South could find themselves at the mercy of more technologically advanced nations eager to deploy and test these weapons, further exacerbating existing power imbalances and inequalities.

The “global arms race” in AI further compounds this vulnerability. As major powers compete for dominance in this new technological frontier, developing nations risk being left behind, unable to afford the vast investments needed to develop and deploy their own AI systems. This technological disparity could further erode their autonomy and sovereignty, leaving them dependent on powerful states for their security while simultaneously susceptible to manipulation and exploitation. Concerns about this dynamic are already surfacing even among technologically advanced nations in the Global South. For example, Singapore, despite its emphasis on “responsible, safe, reliable, and robust AI development,” has expressed anxieties about the destabilizing potential of an escalating AI arms race in the region.

The proliferation of drones and AI technologies beyond major powers adds another layer of complexity and risk. As evidenced by their use in conflicts such as those in Myanmar, Ukraine and Syria, both non-state actors and governments are increasingly using commercially available drones, often equipped with AI capabilities, to gain a tactical advantage. This has the potential to significantly alter the dynamics of asymmetric warfare, creating new challenges for even well-equipped militaries. In Myanmar, for example, pro-democracy forces have effectively used commercially available drones to counter the military junta’s forces. This example demonstrates the potential for this technology to shift the balance of power in unexpected ways. However, as these technologies become more accessible, the risks of misuse and proliferation increase, particularly in regions with weak governance and ongoing conflicts. The potential for AI-powered drones to be used for extrajudicial killings, targeted assassinations or even ethnic cleansing, as we have seen with the IDF’s use of AI, is a chilling prospect.

The international arms industry, driven by powerful economic incentives, plays a significant role in pushing AI-driven military technologies into the Global South. The increasing commercialization of “ethics” in AI, marketed as an objective tool to reduce uncertainty, further normalizes the development and deployment of these systems, presenting them as solutions to complex ethical dilemmas rather than as technologies that may exacerbate them. For instance, companies such as Anduril and Elbit Systems, already heavily involved in Australia’s military buildup, actively market their wares to developing nations, often with little regard for the ethical implications or potential for misuse. This creates a dangerous situation in which countries in the Global South, eager to enhance their security or gain a tactical edge in regional conflicts, could be lured into acquiring AI systems that are untested, lack adequate safeguards or are inherently susceptible to bias and discrimination, further endangering their already vulnerable populations.

History offers countless examples of how the Global South has served as a testing ground for new and often deadly military technologies, leaving a lasting legacy of suffering and environmental destruction. From Agent Orange in Vietnam and cluster bombs in Laos and Cambodia, to depleted uranium munitions in Iraq and Kosovo, to the widespread use of landmines, the human cost has been immense. The testing of nuclear weapons in the Pacific Islands inflicted devastating and long-lasting environmental damage on these vulnerable communities, exposing them to high levels of radiation and displacing entire populations. This pattern of exploitation continues today with the surge in drone strikes under the “war on terror” across the Middle East and Africa, from Yemen and Somalia to Pakistan and Mali. These drone strikes, often justified as precise attacks against “terrorists,” have resulted in countless civilian casualties, fuelling further instability and raising grave concerns about the erosion of international law. AI-powered warfare, with its inherent risks and potential for unintended consequences, threatens to perpetuate this cycle of violence and dehumanization. The unchecked proliferation of commercially available AI-powered surveillance technologies, often developed and marketed by companies based in the Global North, presents a particular threat. These technologies, if deployed with limited oversight or accountability, could be used to solidify authoritarian rule, suppress dissent, and perpetuate cycles of violence and human rights abuses.

The case of Gaza, with its harrowing accounts of AI-driven targeting and its devastating consequences for Palestinian civilians, serves as a stark warning for the Global South. The unchecked pursuit of “efficient” killing, facilitated by algorithms and fuelled by a dangerous faith in technological “objectivity,” has resulted in the deaths of thousands of Palestinian civilians. The development and deployment of AI in warfare, coupled with the commercial interests of the arms industry and the geopolitical ambitions of powerful states, could usher in a new era of technological dominance and oppression, further marginalizing and endangering already vulnerable communities.